I am a fourth-year CS PhD student at University College London (UCL), supervised by Edward Grefenstette and Tim Rocktäschel, and a PhD Researcher at FAIR London. My research focuses on deep reinforcement learning, world models, and LLM reasoning.

Before starting my PhD, I studied CS and Math at Rice University and spent some time working on applied ML in the Bay Area.

Research Vision

My research studies how learning systems can acquire internal models of the world that support reasoning, planning, and generalization from limited experience. Humans do this naturally, whereas many modern learning systems, despite impressive gains from large-scale optimization, remain fragile when observations are partial, interaction is expensive, or task objectives change.

I focus on world models as a framework for understanding this gap, particularly in settings where information is limited, costly, or biased. Much of my work addresses the practical bottlenecks that arise when world models are used for decision-making in realistic environments, including data collection without rewards, long-horizon reasoning, and high-dimensional control. More broadly, I am interested in how learning under information constraints shapes the representations that models acquire, and how this can lead to improved robustness, generalization, and sample efficiency.

Publications

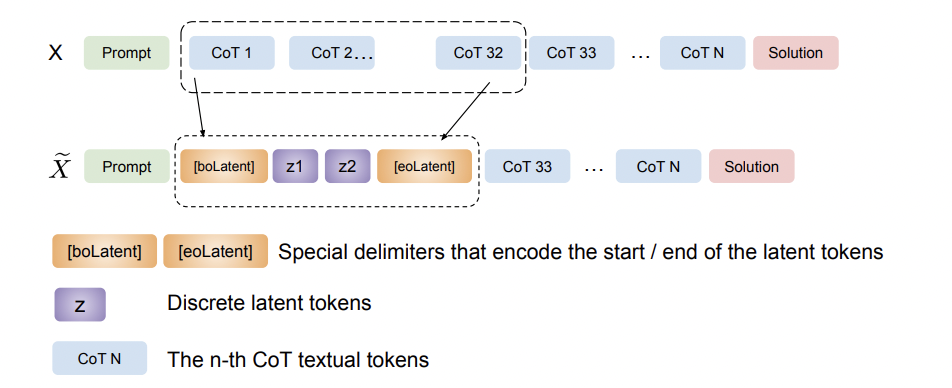

Token Assorted: Mixing Latent and Text Tokens for Improved Language Model Reasoning

D. Su, H. Zhu, Y. Xu, J. Jiao, Y. Tian, Q. Zheng

ICML 2025

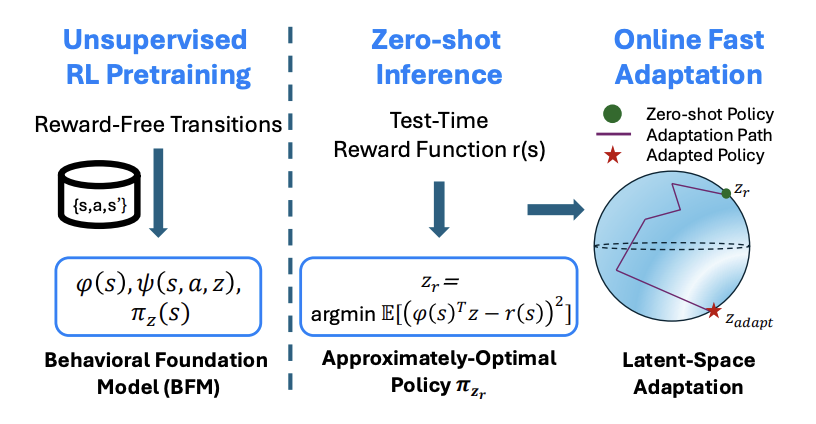

Fast Adaptation with Behavioral Foundation Models

H. Sikchi, A. Tirinzoni, A. Touati, Y. Xu, A. Kanervisto, S. Niekum, A. Zhang, A. Lazaric, M. Pirotta

RLC 2025

Meta Motivo: Zero-Shot Whole-Body Humanoid Control via Behavioral Foundation Models

A. Tirinzoni, A. Touati, J. Farebrother, M. Guzek, A. Kanervisto, Y. Xu, A. Lazaric, M. Pirotta

ICLR 2025

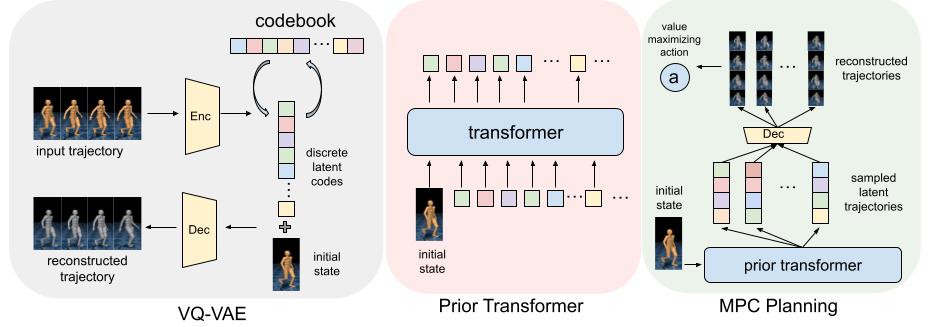

H-GAP: Humanoid Control with a Generalist Planner

Z. Jiang*, Y. Xu*, N. Wagener, Y. Luo, M. Janner, E. Grefenstette, T. Rocktäschel, Y. Tian

ICLR 2024 Spotlight

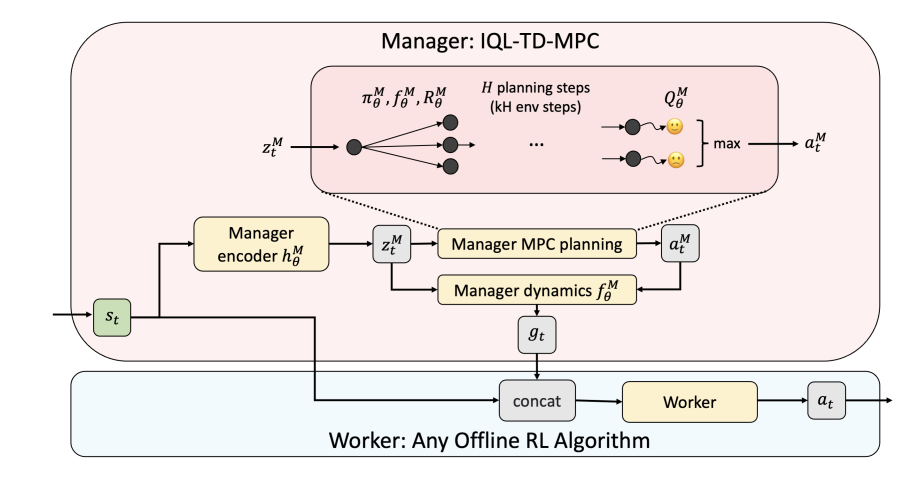

IQL-TD-MPC: Implicit Q-Learning for Hierarchical Model Predictive Control

R. Chitnis*, Y. Xu*, B. Hashemi, L. Lehnert, U. Dogan, Z. Zhu, O. Delalleau

ICRA 2024

Learning General World Models in a Handful of Reward-Free Deployments

Y. Xu*, J. Parker-Holder*, A. Pacchiano*, P. J. Ball*, O. Rybkin, S. J. Roberts, T. Rocktäschel, E. Grefenstette

NeurIPS 2022

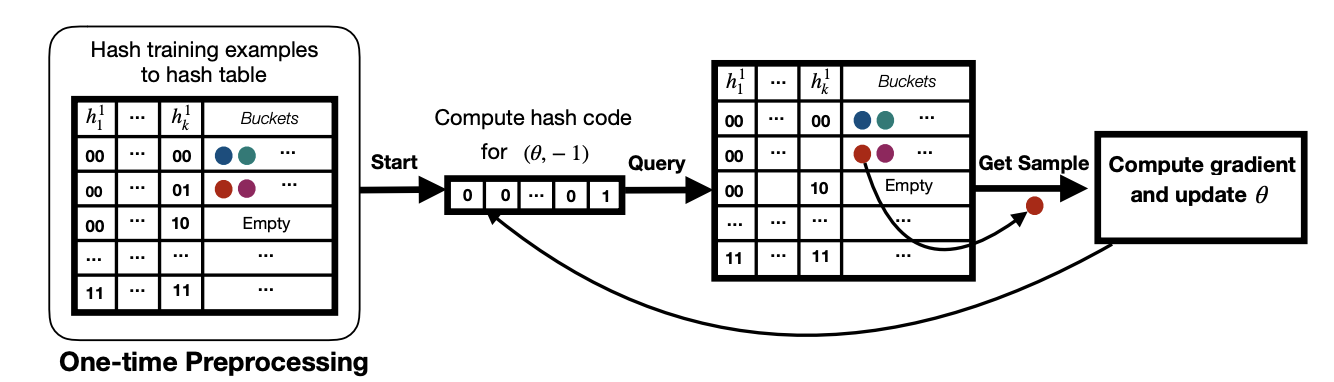

LGD: Fast and Accurate Stochastic Gradient Estimation

B. Chen, Y. Xu, A. Shrivastava

NeurIPS 2019

Looking into the past: Eye-tracking mental simulation in physical inference

A. Beller, Y. Xu, S. Linderman, T. Gerstenberg

Cognitive Science 2022